Anyone who has set up a smart home knows the routine: one app to dim the lights, another to adjust the thermostat, and a voice assistant that only understands exact phrasing. These systems call themselves smart, but in practice they are often rigid and frustrating.

A new paper by Alakesh Kalita, IEEE Senior Member, suggests a different path. By combining large language models (LLMs) with IoT networks at the edge, devices could respond to natural language commands in a way that feels intuitive and coordinated. Instead of managing each device separately, a user could issue one broad command and let the system work out the details.

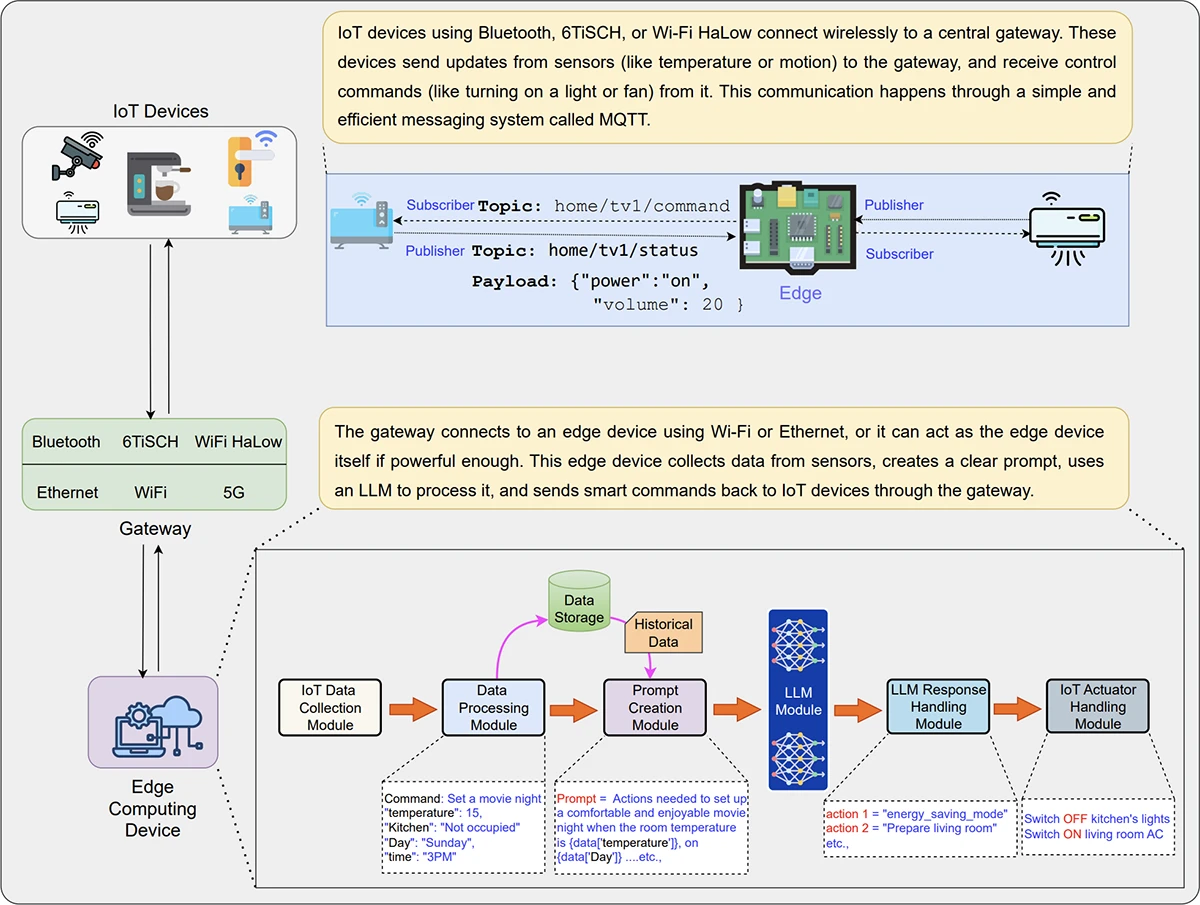

The proposed framework is divided into structured modules that handle data collection, data processing, prompt creation, response handling, and actuator management.

Traditional IoT systems rely on simple command-based logic. A user might turn off a light or adjust a thermostat with a single-purpose app or sensor. These systems work, but they require users to spell out exactly what they want, often through multiple steps. Kalita’s approach adds a natural language interface powered by LLMs, enabling users to issue broader, more flexible commands such as “set up for movie night.” The system can then interpret that request and trigger multiple devices, such as dimming lights, turning on the TV, and adjusting the temperature.

### A modular, edge-first framework

To make this work in practice, the researcher presents a modular, edge-centric design. Instead of running LLMs on each IoT device, which is impractical due to their limited resources, the models run on a more capable edge computing device connected to the network’s gateway. This setup processes data locally, which reduces latency and improves privacy.

The system is divided into several modules. It starts with the IoT Data Collection Module, which gathers sensor data and user commands using MQTT, a lightweight messaging protocol. Next, the Data Processing Module formats and filters this input. Historical data is stored separately and can be used later to provide context for decision-making.
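
The Data Processing Module's role is easier to see in code. Below is a minimal Python sketch that assumes sensor messages arrive as flat JSON objects on MQTT topics. It covers only the formatting and filtering step and a bounded history buffer. The field whitelist (`ALLOWED_FIELDS`), the `DataProcessor` class, and the message shape are hypothetical and are not taken from the paper. In a real deployment, `handle()` would be called from an MQTT client's message callback (for example, paho-mqtt's `on_message`).

```python
import json
from collections import deque
from dataclasses import dataclass

# Hypothetical whitelist of sensor fields; anything else is filtered out.
ALLOWED_FIELDS = {"temperature", "humidity", "light_level", "occupancy"}


@dataclass
class Reading:
    topic: str
    field: str
    value: float
    ts: float


class DataProcessor:
    """Formats and filters raw MQTT payloads and keeps a bounded history."""

    def __init__(self, history_size: int = 100):
        self.latest = {}  # field name -> most recent Reading
        self.history = deque(maxlen=history_size)  # oldest entries drop off first

    def handle(self, topic: str, payload: bytes, ts: float) -> list:
        """Parse one MQTT message; return the readings that passed the filter."""
        try:
            data = json.loads(payload)
        except (json.JSONDecodeError, UnicodeDecodeError):
            return []  # drop malformed messages instead of crashing the pipeline
        if not isinstance(data, dict):
            return []
        accepted = []
        for field, value in data.items():
            # Keep whitelisted numeric fields only (bool is a subclass of int, so exclude it).
            if field not in ALLOWED_FIELDS or isinstance(value, bool):
                continue
            if not isinstance(value, (int, float)):
                continue
            reading = Reading(topic, field, float(value), ts)
            self.latest[field] = reading
            self.history.append(reading)
            accepted.append(reading)
        return accepted
```

For example, `DataProcessor().handle("home/livingroom/sensors", b'{"temperature": 22.5, "battery": 90}', ts=time.time())` would keep the temperature reading and drop the non-whitelisted `battery` field. The stored history is what a later prompt-building step could draw on for context.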

The real innovation comes from how the system creates prompts for the LLM. The Prompt Creation Module combines real-time sensor data with stored history to generate a structured prompt using a Retrieval-Augmented Generation (RAG) approach. This structured input helps the LLM deliver more accurate, situation-aware responses.
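
The retrieval-plus-prompt step can be sketched in a few lines of Python. The paper does not detail its retrieval method at this level, so this sketch substitutes simple keyword overlap for the embedding-based search that many RAG systems use. The function names, stopword list, and prompt wording are all hypothetical.

```python
import json
import re

# Hypothetical stopword list so common words do not dominate the overlap score.
STOPWORDS = {"a", "an", "the", "for", "to", "of", "and", "at", "was", "is", "i", "now"}


def tokenize(text: str) -> set:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def retrieve(command: str, history: list, k: int = 2) -> list:
    """Return up to k history entries sharing the most words with the command."""
    query = tokenize(command)
    scored = [(len(query & tokenize(h)), i, h) for i, h in enumerate(history)]
    scored = [s for s in scored if s[0] > 0]  # ignore entries with no overlap
    scored.sort(key=lambda s: (-s[0], s[1]))  # best score first, earlier entry wins ties
    return [h for _, _, h in scored[:k]]


def build_prompt(command: str, sensors: dict, history: list, devices: list, k: int = 2) -> str:
    """Combine live sensor data and retrieved history into one structured prompt."""
    context = retrieve(command, history, k)
    context_lines = [f"- {c}" for c in context] or ["- none"]
    lines = [
        "You control these devices: " + ", ".join(devices) + ".",
        "Current sensor readings: " + json.dumps(sensors, sort_keys=True),
        "Relevant past events:",
        *context_lines,
        f'User command: "{command}"',
        'Reply only with JSON like {"actions": [{"device": "...", "state": "..."}]}.',
    ]
    return "\n".join(lines)
```

The design keeps the prompt's structure fixed (devices, live state, retrieved context, command, output format) and varies only the retrieved lines. Swapping `retrieve` for a vector search would not change the prompt layout.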

Once the LLM processes the prompt, the output is passed to the Response Handling Module, which parses it into a standard format. The Actuator Handling Module then sends the appropriate commands back to the IoT devices.
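
Here is a minimal sketch of the Response Handling and Actuator Handling steps. It assumes the model has been asked for JSON of the form `{"actions": [{"device": ..., "state": ...}]}`. The device/state table and the `home/<device>/set` topic layout are hypothetical. Publishing is left out so the sketch stays dependency-free, but each resulting pair could be handed to an MQTT client's publish call (for example, paho-mqtt's `Client.publish(topic, payload)`).

```python
import json

# Hypothetical table of devices and the states each one accepts.
KNOWN_STATES = {
    "light": {"on", "off", "dim"},
    "tv": {"on", "off"},
    "fan": {"on", "off"},
}


def extract_json(text: str) -> dict:
    """Pull the first JSON object out of a model reply that may include extra prose."""
    start = text.find("{")
    if start == -1:
        raise ValueError("no JSON object in model output")
    # raw_decode parses one value from the start of the string and ignores trailing text.
    obj, _ = json.JSONDecoder().raw_decode(text[start:])
    return obj


def to_commands(reply: str) -> list:
    """Normalize a model reply into (MQTT topic, payload) pairs for the actuators."""
    actions = extract_json(reply).get("actions", [])
    if not isinstance(actions, list):
        raise ValueError('"actions" must be a list')
    commands = []
    for action in actions:
        if not isinstance(action, dict):
            continue
        device = str(action.get("device", "")).strip().lower()
        state = str(action.get("state", "")).strip().lower()
        # Silently skip devices or states the home does not support.
        if state in KNOWN_STATES.get(device, set()):
            commands.append((f"home/{device}/set", state))
    return commands
```

Skipping unsupported actions silently keeps the sketch short. In practice, those dropped actions are worth logging rather than discarding.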

### Testing the approach in a smart home

To test this setup, the author built a smart home prototype with a Raspberry Pi 5 as the edge device. Three appliances (a light, a TV, and a fan) were connected through ESP8266 microcontrollers. Two LLMs were tested: LLaMA 3 (7B) and Gemma 2B. Commands such as “set the room for study” or “I want to sleep now” were issued through a text interface, and the models interpreted them, generated JSON responses, and dispatched the corresponding actions.

LLaMA 3 delivered responses with higher semantic accuracy but took significantly longer, up to 208 seconds. Gemma 2B was much faster, responding in under 30 seconds, but occasionally misunderstood commands. This highlights the core trade-off: larger models are more accurate but slower, while smaller models are faster but may need tuning for specific tasks.

### Expanding the use cases

The author also explores broader use cases. In industrial settings, LLMs could enhance predictive maintenance by interpreting complex sensor patterns. In healthcare, they could support real-time monitoring and personalized alerts based on wearable sensor data.

For communication efficiency, edge-deployed LLMs could reduce bandwidth use by generating compact, semantic descriptions instead of sending raw data. This would be especially relevant in 5G and future 6G networks.
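
The sketch below illustrates the bandwidth argument by comparing a window of raw readings with a one-line description. In the paper's vision, an edge LLM would write that description. Here a simple template (`describe`, a hypothetical helper) stands in for the model so that the size comparison is runnable.

```python
import json
import statistics


def describe(readings: list, sensor: str = "temperature", unit: str = "C") -> str:
    """Compress a window of raw readings into one short semantic summary."""
    return (
        f"{sensor} {min(readings):.1f}-{max(readings):.1f} {unit}, "
        f"mean {statistics.mean(readings):.1f} over {len(readings)} samples"
    )


if __name__ == "__main__":
    # Hypothetical one-hour window: one reading per minute.
    window = [21.0 + (i % 5) * 0.5 for i in range(60)]
    raw = json.dumps(window).encode()
    summary = describe(window).encode()
    print(f"raw: {len(raw)} bytes, summary: {len(summary)} bytes")
    print(summary.decode())
```

The savings grow with the window size, because the summary's length stays roughly constant. The trade-off is that detail is lost, so a real system would need to decide when raw data must still be sent.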

### Security concerns at the edge

Security is a major consideration when LLMs control IoT devices. Chas Clawson, Field CTO, Security at Sumo Logic, told Help Net Security that the industry has long focused on protecting human identities, but must now apply the same rigor to non-human identities that can trigger physical change. He pointed out that research is improving guardrails to make LLM control paths safer and more deterministic, but that “we still need a trust but verify approach: wrap every action with monitoring, policy checks, and alerts to catch control failures or boundary violations.”

In practice, Clawson recommends centralizing log feeds for critical systems, adding detections for outlier behavior, and expanding telemetry beyond device logs to include prompts, model outputs, validator rejections, and actuator decisions as first-class audit events. He also advised shifting “further left” by monitoring changes to application code, model and RAG configurations, and deployments with a software supply chain mindset, so that vulnerabilities are not introduced unnoticed.
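
One way to treat prompts, model outputs, validator rejections, and actuator decisions as first-class audit events is a structured, append-only log keyed by a per-request ID. A central log pipeline can then ingest the log and run detections over it. The sketch below assumes JSON Lines output. The `AuditLog` class and event names are illustrative, not something Clawson or the paper prescribes.

```python
import io
import json
import time
import uuid

# The four event kinds Clawson suggests treating as first-class audit events.
EVENT_TYPES = {"prompt", "model_output", "validator_rejection", "actuator_decision"}


class AuditLog:
    """Append-only JSON Lines audit trail; a request ID ties one command's events together."""

    def __init__(self, sink):
        self.sink = sink  # any writable text stream, such as an open file or io.StringIO

    def record(self, request_id: str, event_type: str, **fields) -> dict:
        if event_type not in EVENT_TYPES:
            raise ValueError(f"unknown audit event type: {event_type}")
        event = {"ts": time.time(), "request_id": request_id, "type": event_type, **fields}
        self.sink.write(json.dumps(event, sort_keys=True) + "\n")
        self.sink.flush()  # flush after every event so a process crash loses no buffered entries
        return event


if __name__ == "__main__":
    log = AuditLog(io.StringIO())
    rid = str(uuid.uuid4())
    log.record(rid, "prompt", text="set up for movie night")
    log.record(rid, "model_output", text='{"actions": [{"device": "oven", "state": "on"}]}')
    log.record(rid, "validator_rejection", device="oven", reason="unknown device")
    print(log.sink.getvalue())
```

Sharing one request ID across all four event types lets a detection rule reconstruct the full path from a user's words to a physical action. That reconstruction is the "trust but verify" visibility Clawson describes.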

The paper also cautions that challenges remain. Privacy is a concern when sensitive data is processed by LLMs. Local edge execution offers better privacy than cloud-based models, but also limits scalability. In critical environments such as healthcare or industrial automation, mistakes from an LLM could have physical consequences. The author suggests combining LLMs with rule-based systems and developing domain-specific benchmarks to improve reliability.
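
The suggestion to pair LLMs with rule-based systems can be sketched as a deterministic gate that every model-proposed action must pass before it reaches an actuator. This also matches the policy checks Clawson describes. The `Action` type, the context fields, and both rules below are hypothetical examples, not rules from the paper.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Action:
    device: str
    state: str


# A rule inspects one proposed action plus the current context and returns a
# rejection reason, or None if the action is allowed.
Rule = Callable[[Action, dict], Optional[str]]


def known_device(action: Action, ctx: dict) -> Optional[str]:
    if action.device not in ctx["devices"]:
        return f"unknown device {action.device!r}"
    return None


def no_fan_when_cold(action: Action, ctx: dict) -> Optional[str]:
    temp = ctx.get("temperature")
    if action.device == "fan" and action.state == "on" and temp is not None and temp < 18:
        return "fan blocked below 18 C"
    return None


RULES: List[Rule] = [known_device, no_fan_when_cold]


def gate(actions: List[Action], ctx: dict, rules: List[Rule] = RULES) -> Tuple[list, list]:
    """Split model-proposed actions into allowed ones and (action, reasons) rejections."""
    allowed, rejected = [], []
    for action in actions:
        reasons = [r for r in (rule(action, ctx) for rule in rules) if r is not None]
        if reasons:
            rejected.append((action, reasons))
        else:
            allowed.append(action)
    return allowed, rejected
```

Because the rules are ordinary functions, they can be unit-tested and reviewed independently of the model. Rejected actions can also be recorded as validator rejections instead of being silently dropped.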
