Featuring Langfuse and W&B Weave Support, Ktor Integration, Native Structured Output, GPT-5, and More.

Koog 0.3.0 was about making agents smarter and persistent. Koog 0.4.0 is about making them observable, seamlessly deployable in your stack, and more predictable in their outputs – all while introducing support for new models and platforms.

Read on to discover the key highlights of this release and the pain points it is designed to address.

🕵️ Observe what your agents do with OpenTelemetry support for W&B Weave and Langfuse

When something goes wrong with an agent in production, the first questions that pop up are “Where did the tokens go?” and “Why is this happening?”. Koog 0.4.0 comes with full OpenTelemetry support for both W&B Weave and Langfuse.

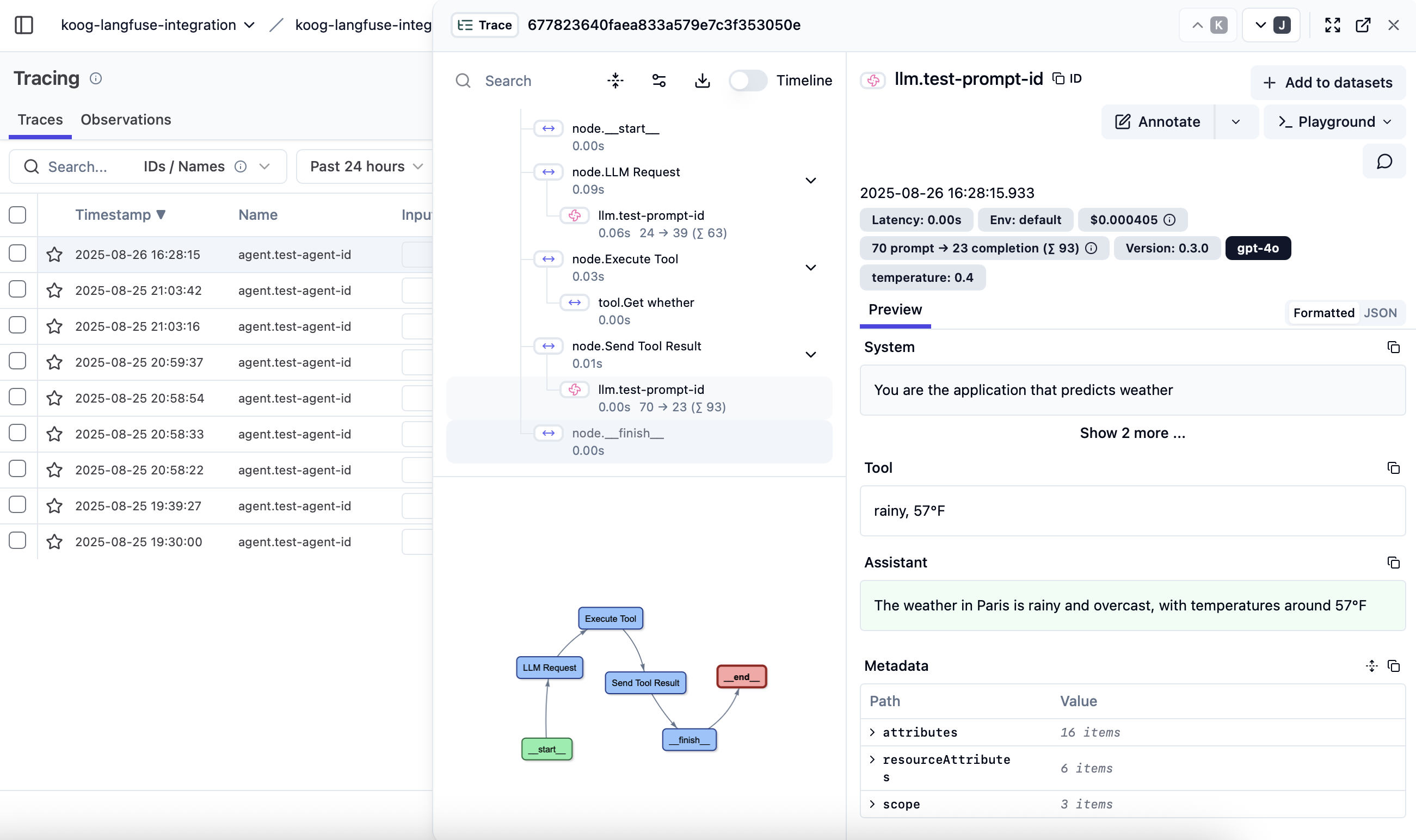

Simply install the desired plugin on any agent and point it to your backend. You’ll be able to see the nested agentic events (nodes, tool calls, LLM requests, and system prompts), along with token and cost breakdowns for each request. In Langfuse, you can also visualize how a run fans out and converges, which is perfect for debugging complex graphs.

W&B Weave setup:

```kotlin
val agent = AIAgent(
    ...
) {
    install(OpenTelemetry) {
        addWeaveExporter(
            weaveOtelBaseUrl = "WEAVE_TELEMETRY_URL",
            weaveApiKey = "WEAVE_API_KEY",
            weaveEntity = "WEAVE_ENTITY",
            weaveProjectName = "WEAVE_PROJECT_NAME"
        )
    }
}
```

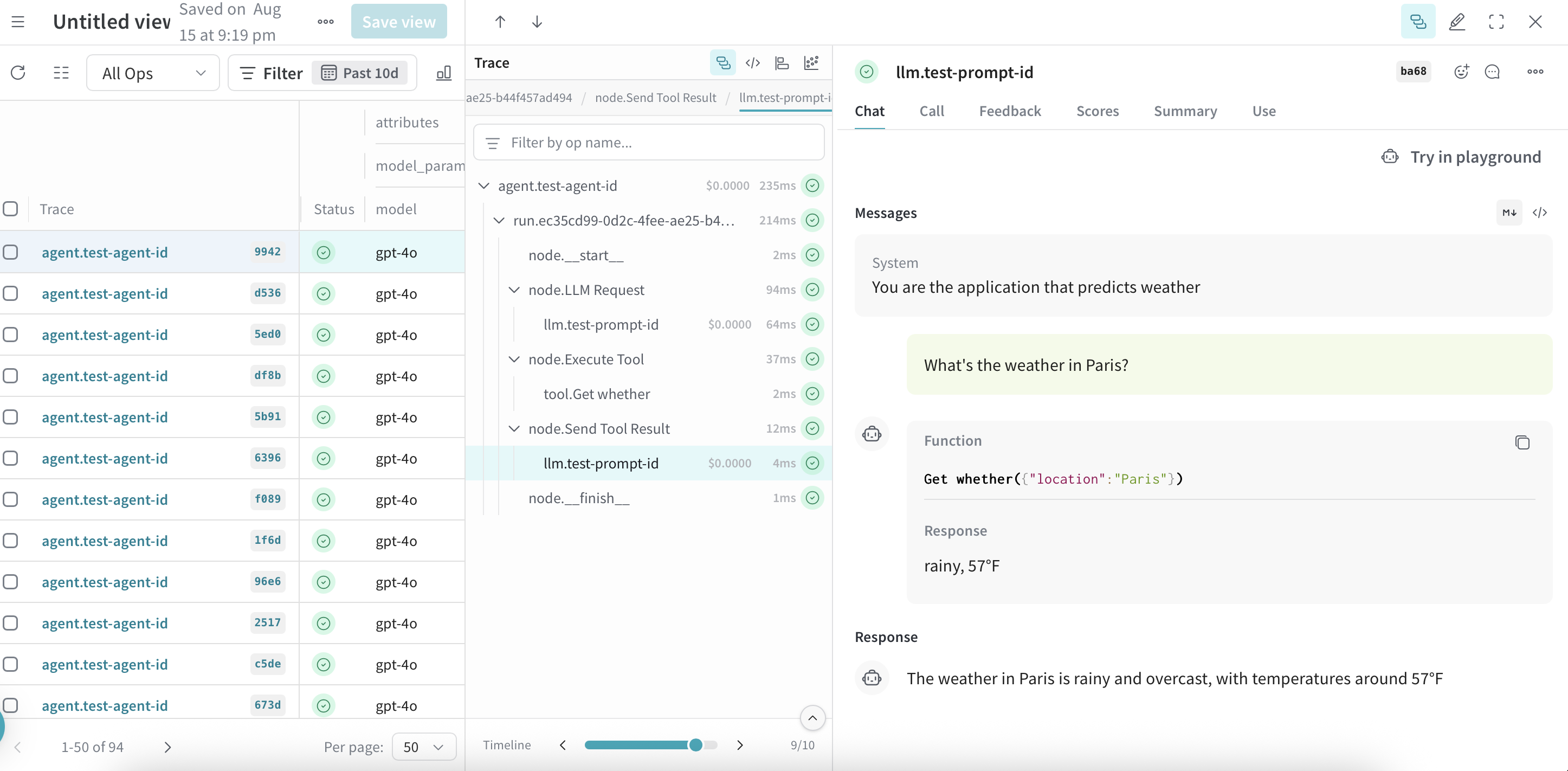

This will allow you to see the traces from your agent in W&B Weave:

Langfuse setup:

```kotlin
val agent = AIAgent(
    ...
) {
    install(OpenTelemetry) {
        addLangfuseExporter(
            langfuseUrl = "LANGFUSE_URL",
            langfusePublicKey = "LANGFUSE_PUBLIC_KEY",
            langfuseSecretKey = "LANGFUSE_SECRET_KEY"
        )
    }
}
```

This lets you see the agent traces and their graph visualizations in Langfuse.

Once everything is connected, head to your observability tool to inspect traces, usage, and costs.

🧩 Drop-in Ktor integration to put Koog behind your API in minutes

Already have a Ktor server? Perfect! Just install Koog as a Ktor plugin, configure providers in application.conf or application.yaml, and call agents from any route. No more connecting LLM clients across modules – your routes just request an agent and are ready to go.

Now you can configure Koog in application.yaml:

```yaml
koog:
  openai.apikey: "$OPENAI_API_KEY:your-openai-api-key"
  anthropic.apikey: "$ANTHROPIC_API_KEY:your-anthropic-api-key"
  google.apikey: "$GOOGLE_API_KEY:your-google-api-key"
  openrouter.apikey: "$OPENROUTER_API_KEY:your-openrouter-api-key"
  deepseek.apikey: "$DEEPSEEK_API_KEY:your-deepseek-api-key"
  ollama.enabled: "$DEBUG:false"
```

Or in code:

```kotlin
fun Application.module() {
    install(Koog) {
        llm {
            openAI(apiKey = "your-openai-api-key")
            anthropic(apiKey = "your-anthropic-api-key")
            ollama { baseUrl = "http://localhost:11434" }
            google(apiKey = "your-google-api-key")
            openRouter(apiKey = "your-openrouter-api-key")
            deepSeek(apiKey = "your-deepseek-api-key")
        }
    }
}
```
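The values in the YAML configuration follow the `"$ENV_VAR:default"` placeholder style that, as we understand it, Ktor's YAML configuration supports: the environment variable is used when it is set, and the text after the colon otherwise. The sketch below mimics that rule in plain Kotlin to make the behavior concrete. `resolveConfigValue` is a hypothetical helper, not part of Ktor or Koog, and it takes the environment as a map so it can be tried without touching real variables.

```kotlin
/**
 * Hypothetical helper mimicking the "$ENV_VAR:default" placeholders from the
 * YAML example: use the environment variable when it is set, otherwise fall
 * back to the text after the first ':'. Not Ktor's actual implementation.
 */
fun resolveConfigValue(raw: String, env: Map<String, String> = System.getenv()): String {
    // Values that do not start with '$' are literals and are returned unchanged.
    if (!raw.startsWith('$')) return raw

    val body = raw.drop(1)
    val name = body.substringBefore(':')
    // Only text after the FIRST ':' is the fallback, so a fallback such as
    // "http://localhost:11434" keeps its own colons.
    val fallback = if (':' in body) body.substringAfter(':') else null

    return env[name]
        ?: fallback
        ?: error("Environment variable $name is not set and no default was given")
}
```

For example, with `OPENAI_API_KEY` set in the environment, the first YAML entry resolves to that key; with `DEBUG` unset, `ollama.enabled` resolves to the string `false`.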

Next, you can use aiAgent anywhere in your routes:

```kotlin
routing {
    route("/ai") {
        post("/chat") {
            val userInput = call.receive<String>()
            val output = aiAgent(
                strategy = reActStrategy(),
                model = OpenAIModels.Chat.GPT4_1,
                input = userInput
            )
            call.respond(HttpStatusCode.OK, output)
        }
    }
}
```

🏛️ Structured output that actually holds up in production

Calling an LLM and getting exactly the data format you need feels magical – until it stops working and the magic dries up. Koog 0.4.0 adds native structured output for the LLMs that support it, along with pragmatic guardrails such as retries and fixing strategies.

When a model supports structured output, Koog uses it directly. Otherwise, Koog falls back to a tuned prompt and, if needed, retries with a fixing parser powered by a separate model until the payload looks exactly the way you need it to.

Define your schema once:

```kotlin
@Serializable
@LLMDescription("Weather forecast for a location")
data class WeatherForecast(
    @property:LLMDescription("Location name") val location: String,
    @property:LLMDescription("Temperature in Celsius") val temperature: Int,
    @property:LLMDescription("Weather conditions (e.g., sunny, cloudy, rainy)") val conditions: String
)
```

You decide which approach fits your use case best. Request data from the model natively when supported, and through prompts when it isn’t:

```kotlin
val response = requestLLMStructured<WeatherForecast>()
```

You can add automatic fixing and examples to make it more resilient:

```kotlin
val weather = requestLLMStructured<WeatherForecast>(
    fixingParser = StructureFixingParser(
        fixingModel = OpenAIModels.Chat.GPT4o,
        retries = 5
    ),
    examples = listOf(
        WeatherForecast("New York", 22, "cloudy"),
        WeatherForecast("Monaco", 29, "sunny")
    )
)
```
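The fallback path described earlier, where a payload that fails to parse is handed to a fixing model and parsed again up to a retry limit, can be pictured as a simple loop. The sketch below is a hypothetical, dependency-free illustration, not Koog's `StructureFixingParser` implementation: `parseOrFix`, the toy `parseForecast` parser (which reads `location;temperature;conditions` instead of JSON), and the `fix` lambda standing in for a call to the fixing model are all assumptions made for the example.

```kotlin
/**
 * Hypothetical parse-then-fix loop. `parse` returns null when the payload does
 * not match the expected structure; `fix` stands in for asking a separate
 * fixing model to repair the payload. Returns null if every attempt fails.
 */
fun <T : Any> parseOrFix(
    payload: String,
    retries: Int,
    parse: (String) -> T?,
    fix: (payload: String, attempt: Int) -> String,
): T? {
    var current = payload
    // One initial parse plus up to `retries` repair-and-reparse rounds.
    for (attempt in 0..retries) {
        parse(current)?.let { return it }
        if (attempt < retries) current = fix(current, attempt + 1)
    }
    return null // the payload never matched the expected shape
}

// Dependency-free stand-in for the @Serializable class above.
data class WeatherForecast(val location: String, val temperature: Int, val conditions: String)

// Toy parser for "location;temperature;conditions", used instead of JSON decoding.
fun parseForecast(text: String): WeatherForecast? {
    val parts = text.split(";")
    if (parts.size != 3) return null
    val temperature = parts[1].trim().toIntOrNull() ?: return null
    return WeatherForecast(parts[0].trim(), temperature, parts[2].trim())
}
```

For example, `"Monaco;29C;sunny"` fails to parse because of the stray unit; a `fix` lambda that strips the `C` lets the second parse succeed with `WeatherForecast(location=Monaco, temperature=29, conditions=sunny)`.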

🤔 Tune how models think with GPT-5 and custom parameters

Want your model to think harder on complex problems, or say less in chat-like flows? Version 0.4.0 adds GPT-5 support and custom LLM parameters, including settings like reasoningEffort, so you can balance quality, latency, and cost for each call.

```kotlin
val params = OpenAIChatParams(
    /* other params... */
    reasoningEffort = ReasoningEffort.HIGH
)

val prompt = prompt("test", params) {
    system("You are a mathematician")
    user("Solve the equation: x^2 - 1 = 2x")
}

openAIClient.execute(prompt, model = OpenAIModels.Chat.GPT5)
```

🔄 Fail smarter – production-grade retries for flaky calls and subgraphs

It’s inevitable – sometimes LLM calls time out, tools misbehave, or networks hiccup. Koog 0.4.0 introduces RetryingLLMClient, with Conservative, Production, and Aggressive presets, as well as fine-grained control when you need it:

```kotlin
val baseClient = OpenAILLMClient("API_KEY")

val resilientClient = RetryingLLMClient(
    delegate = baseClient,
    config = RetryConfig.PRODUCTION // or CONSERVATIVE, AGGRESSIVE, DISABLED
)
```
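Retry policies of this kind commonly combine a maximum number of attempts with an exponentially growing, capped wait between them. The sketch below shows that general idea in plain Kotlin. `RetryPolicy`, `backoffDelays`, and `retrying` are hypothetical names, and any numbers you plug in are illustrative; they are not the values behind Koog's `RetryConfig` presets.

```kotlin
import kotlin.math.pow

// Hypothetical retry policy: the general shape of a preset, not Koog's RetryConfig.
data class RetryPolicy(
    val maxAttempts: Int,     // total attempts, including the first call
    val initialDelayMs: Long, // wait before the first retry
    val multiplier: Double,   // growth factor between consecutive waits
    val maxDelayMs: Long,     // cap on any single wait
)

// Waits before retry 1, 2, ..., (maxAttempts - 1): exponential growth, capped.
fun backoffDelays(policy: RetryPolicy): List<Long> =
    (0 until policy.maxAttempts - 1).map { retry ->
        (policy.initialDelayMs * policy.multiplier.pow(retry)).toLong()
            .coerceAtMost(policy.maxDelayMs)
    }

// Runs `block`, retrying on exceptions with the waits above, and rethrows the
// last error once all attempts fail. `sleep` is injectable so tests can skip waiting.
fun <T> retrying(
    policy: RetryPolicy,
    sleep: (Long) -> Unit = { Thread.sleep(it) },
    block: () -> T,
): T {
    val delays = backoffDelays(policy)
    var lastError: Exception? = null
    for (attempt in 0 until policy.maxAttempts) {
        try {
            return block()
        } catch (e: Exception) {
            lastError = e
            // No wait after the final attempt.
            if (attempt < delays.size) sleep(delays[attempt])
        }
    }
    throw lastError ?: IllegalArgumentException("maxAttempts must be at least 1")
}
```

For instance, `RetryPolicy(maxAttempts = 4, initialDelayMs = 100, multiplier = 2.0, maxDelayMs = 300)` yields the waits `[100, 200, 300]`. A more aggressive policy would raise `maxAttempts`, while a conservative one would lower it or lengthen the waits; a production version would also skip retries for errors that are not transient.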

Because retries work best with feedback, you can wrap any action (even part of a strategy) in subgraphWithRetry, approve or reject results programmatically, and give the LLM targeted hints on each attempt:

```kotlin
subgraphWithRetry(
    condition = { result ->
        if (result.isGood()) Approve
        else Reject(feedback = "Try again but think harder! $result looks off.")
    },
    maxRetries = 5
) {
    /* any actions here that you want to retry */
}
```
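At its core, this pattern pairs an action with a verdict on its result and feeds any rejection feedback into the next attempt. The plain-Kotlin sketch below captures only that control flow. `Verdict`, `Approve`, `Reject`, `Outcome`, and `runWithFeedback` are hypothetical stand-ins, not Koog's types, and the action receives the previous feedback as a parameter, whereas in a real strategy the hint would be passed on to the LLM.

```kotlin
// Hypothetical stand-ins for the Approve / Reject(feedback) verdicts; not Koog's types.
sealed interface Verdict
object Approve : Verdict
data class Reject(val feedback: String) : Verdict

data class Outcome<T>(val result: T, val approved: Boolean, val attempts: Int)

/**
 * Runs `action`, checks its result with `condition`, and on rejection runs it
 * again with the rejection feedback. `maxRetries` is treated here as the number
 * of retries after the first attempt (an assumption for this sketch). Returns
 * the first approved result, or the last result if every attempt was rejected.
 */
fun <T> runWithFeedback(
    maxRetries: Int,
    condition: (T) -> Verdict,
    action: (feedback: String?) -> T,
): Outcome<T> {
    var feedback: String? = null // no hint on the first attempt
    for (attempt in 1..maxRetries) {
        val result = action(feedback)
        when (val verdict = condition(result)) {
            Approve -> return Outcome(result, approved = true, attempts = attempt)
            is Reject -> feedback = verdict.feedback // hint for the next attempt
        }
    }
    // Final attempt: its result is returned whatever the verdict.
    val result = action(feedback)
    return Outcome(result, approved = condition(result) == Approve, attempts = maxRetries + 1)
}
```

For example, with a `condition` that approves only the value `42` and an `action` that returns `-1` until it receives feedback and `42` afterwards, `runWithFeedback(maxRetries = 5, ...)` returns `Outcome(result=42, approved=true, attempts=2)`.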

📦 Out-of-the-box DeepSeek support

Prefer DeepSeek models? Koog now ships with a DeepSeek client that includes ready-to-use models:

```kotlin
val client = DeepSeekLLMClient("API_KEY")

client.execute(
    prompt = prompt("for-deepseek") {
        system("You are a philosopher")
        user("What is the meaning of life, the universe, and everything?")
    },
    model = DeepSeekModels.DeepSeekReasoner
)
```

As DeepSeek’s API and model lineup continue to evolve, Koog gives you a straightforward way to slot new models into your agents.

✨ Try Koog 0.4.0

If you’re building agents that must be observable, deployable, predictable, and truly multiplatform, Koog 0.4.0 is the right choice. Explore the docs, connect OpenTelemetry to W&B Weave or Langfuse, and drop Koog into your Ktor server to get an agent-ready backend in minutes.

🤝 Your contributions make the difference

We’d like to take this opportunity to extend a huge thank-you to the entire community for contributing to the development of Koog through your feedback, issue reports, and pull requests!

Here’s a list of this release’s top contributors:

Nathan Fallet – added support for the iOS target.

Didier Villevalois – added contextLength and maxOutputTokens to LLModel.

Sergey Kuznetsov – fixed URL generation in AzureOpenAIClientSettings.

Micah – added the missing Document capabilities for LLModel across providers.

jonghoonpark – refined the NumberGuessingAgent example.

Ateş Görpelioğlu – helped with adding tool arguments to OpenTelemetry events.